Google’s E-E-A-T (Experience, Expertise, Authoritativeness, Trust) isn’t a single score you can export. It’s a set of quality principles that influence how people and platforms evaluate your content. The practical question for leaders and editors is simple: how do you measure progress when there is no magic metric and no step-by-step implementation guide? The answer is proxies: observable signals that correlate with E-E-A-T and help you manage quality like a program, not a vibe.

Below is a structured way to think about those proxies, grouped by pillar rather than by persona or tool. Use it as a north star for roadmapping, prioritization, and reporting. The focus is on what to watch and why it matters, not on instrumentation walkthroughs.

1) Experience: “Have you actually done this?”

What it means

Experience is the “been there, did the work, saw the result” layer. It shows up when content reflects real-world use, field testing, or first-hand practice.

Proxies to watch

- First-hand artifacts

  - Original photos, screenshots, data plots, teardown images, or lab notes cited in the piece.

  - Quote density from your own trials, interviews, or experiments (not syndicated).

- Outcome specificity

  - Concrete ranges, constraints, trade-offs (“battery dropped 18–22% during 4K recording” vs. “battery life decreases”).

  - “What went wrong” sections, edge-case callouts, or failure modes documented.

- Reader feedback patterns

  - Comments and emails that reference doing, not just reading (“followed your method and hit X result”).

  - Recurrent questions that assume credibility (“what did you use to measure…?”).

How to use the proxies

When prioritizing, give more weight to content that contains first-hand artifacts and outcome specificity. Pieces that generate “doing-based” feedback are likelier to reflect genuine experience.

2) Expertise: “Do you demonstrably know the subject?”

What it means

Expertise isn’t a job title; it’s the depth and correctness of explanation, the ability to resolve ambiguity, and consistency with accepted primary sources.

Proxies to watch

- Primary-source alignment

  - Citations to standards, white papers, regulatory docs, or foundational studies (not lifestyle listicles).

  - Fewer unsupported claims; fewer corrections over time.

- Concept density without jargon creep

  - High ratio of specific, domain-correct terms to buzzwords, with those terms used correctly in context.

- Peer scrutiny

  - SME reviews (editorial notes logged), acknowledgments of uncertainties or competing viewpoints.

- Reader comprehension signals

  - Lower “thin bounce” on complex topics; steady active time for long explainers; fewer “what does X mean?” comments across releases.

How to use the proxies

Treat primary-source alignment and peer scrutiny as gating criteria for topics where accuracy matters (finance, health, compliance). Comprehension signals validate that expertise is digestible.

3) Authoritativeness: “Do others rely on you?”

What it means

Authority grows when your content becomes a reference point—linked, quoted, or chosen over alternatives—especially by credible sources.

Proxies to watch

- Quality citations in the wild

  - Mentions and links from recognized institutions, industry bodies, reputable media, or respected creators (not link farms).

- Branded demand growth

  - Increases in branded searches around your name + topic (“YourBrand schema guide”), direct visits to resources, inclusion in resource lists.

- Selective inclusion

  - Invitations to contribute definitions, frameworks, or viewpoints in others’ work; frequent inclusion in roundups where editors curate, not aggregate.

- Cross-cluster influence

  - Your pages become landing points for adjacent topics (“people start here” patterns in session paths).

How to use the proxies

Weigh independent, high-quality citations more than raw mention counts. Branded demand growth validates that your authority is sticky and self-reinforcing.
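If you want to make that weighting explicit, a minimal Python sketch might look like the following. The source categories and the weight values are illustrative assumptions, not figures from Google or from any tool; set them to match your own editorial judgment.

```python
from typing import List

# Hypothetical credibility weights per source type (assumed values, tune freely).
SOURCE_WEIGHTS = {
    "standards_body": 5.0,
    "institution": 4.0,
    "reputable_media": 3.0,
    "respected_creator": 2.0,
    "unknown": 0.5,
    "link_farm": 0.0,
}


def weighted_citation_score(citations: List[str]) -> float:
    """Sum credibility weights instead of counting raw mentions.

    `citations` holds one source-type label per mention or link.
    Labels missing from SOURCE_WEIGHTS fall back to the "unknown" weight.
    """
    return sum(
        (SOURCE_WEIGHTS.get(kind, SOURCE_WEIGHTS["unknown"]) for kind in citations),
        0.0,
    )
```

Under these assumed weights, fifty link-farm mentions score 0.0, while one citation from a standards body plus one from a reputable outlet scores 8.0.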

4) Trust: “Should I believe you—and is it safe to act on your advice?”

What it means

Trust blends transparency, safety, and consistency. For many verticals, it’s the make-or-break dimension.

Proxies to watch

- Identity and accountability clarity

  - Author pages with real bios, qualifications, affiliations, and update histories; visible editorial standards.

- Corrections culture

  - Public changelogs, dated updates, and visible errata; low rate of substantive corrections post-publish.

- Risk disclosures

  - Clear limitations, contraindications, or “do not attempt” notes where appropriate; links to official guidance when actionable risk exists.

- Audience protection signals

  - Absence of manipulative patterns (fake scarcity, deceptive design); transparent affiliate disclosures; consistent privacy posture across properties.

How to use the proxies

Trust proxies are table stakes. If identity, corrections, or disclosures are weak, progress in other pillars won’t offset the signal loss.

5) Cohesion across the four pillars: signals that compound

Some proxies reflect multiple pillars at once—and therefore have outsized value.

- Methodologically sound original research

First-hand (experience), accurate (expertise), cited by others (authority), and transparent about limitations (trust).

- Expert-authored, editor-reviewed explainers with field photos and a dated update log

One asset, four pillars aligned.

Prioritize work that checks multiple boxes; these pieces tend to become evergreen references.

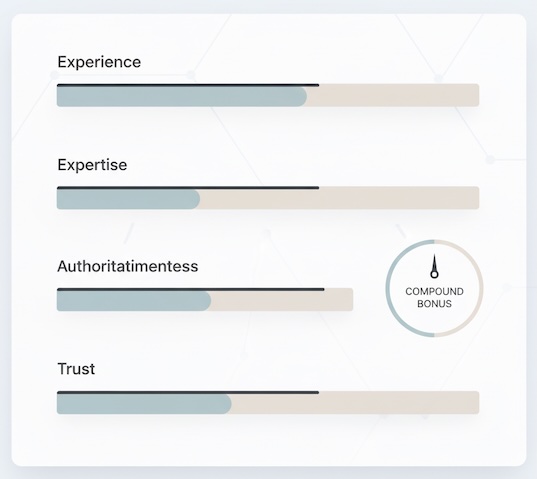

A practical scoring lens (no tooling required)

To structure editorial planning and stakeholder reporting, classify each piece or cluster with a lightweight rubric. Keep it directional, not forensic.

- Experience (0–3): 0 = commentary only; 3 = first-hand artifacts + failure modes + quantified outcomes.

- Expertise (0–3): 0 = generic claims; 3 = primary-source aligned + SME reviewed + competing views acknowledged.

- Authority (0–3): 0 = no credible citations; 3 = referenced by recognized sources + branded demand up.

- Trust (0–3): 0 = anonymous, undisclosed; 3 = full authorship, disclosures, update logs, and user-safe patterns.

Add a Compound Bonus (0 to +2) for assets that clearly span pillars (e.g., an original research paper). You’re not chasing precision; you’re creating a common language to rank roadmap items and show improvement over time.
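For teams that keep the rubric in a script rather than a spreadsheet, here is one way to encode it in Python. The `RubricScore` class and its field names are hypothetical, and summing the pillars into a single total is an assumption made for ranking convenience; the rubric itself only defines the per-pillar ranges and the bonus.

```python
from dataclasses import dataclass

PILLARS = ("experience", "expertise", "authority", "trust")


@dataclass(frozen=True)
class RubricScore:
    """Editor-assigned scores for one piece or cluster (hypothetical structure)."""

    experience: int
    expertise: int
    authority: int
    trust: int
    compound_bonus: int = 0

    def __post_init__(self):
        # Enforce the ranges stated in the rubric: 0-3 per pillar, 0-2 bonus.
        for pillar in PILLARS:
            value = getattr(self, pillar)
            if not 0 <= value <= 3:
                raise ValueError(f"{pillar} must be between 0 and 3, got {value}")
        if not 0 <= self.compound_bonus <= 2:
            raise ValueError(
                f"compound_bonus must be between 0 and 2, got {self.compound_bonus}"
            )

    @property
    def total(self) -> int:
        # Assumption: a plain sum (maximum 14) is good enough for directional ranking.
        return sum(getattr(self, p) for p in PILLARS) + self.compound_bonus

    @property
    def weakest_pillar(self) -> str:
        # Ties resolve to the first pillar in PILLARS order.
        return min(PILLARS, key=lambda p: getattr(self, p))
```

A piece scored 3, 2, 1, 3 with a bonus of 1 totals 10 out of a possible 14, and its weakest pillar is authority, which tells you where the next brief should focus.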

Portfolio diagnostics: where E-E-A-T breaks down

Use patterns, not single datapoints:

- High traffic, low citations: Authority gap—content is discoverable but not reference-worthy. Strengthen primary-source alignment and unique insights.

- Deep engagement, frequent corrections: Expertise gap—compelling but error-prone; tighten SME review loops.

- Strong citations, weak reader trust: Trust gap—perhaps dated pieces, thin disclosures, or aggressive monetization patterns.

- Great guides, no evidence of doing: Experience gap—add first-hand trials, original visuals, quantified results.

These diagnoses guide editorial briefs, not just edits.
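The four patterns above translate naturally into simple rules. The sketch below assumes an editor has already judged each signal as true or false for a page; the `PageSignals` fields and the `diagnose` function are hypothetical names, and no thresholds are implied for what counts as “high” or “frequent.”

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PageSignals:
    """Editor-judged yes/no signals for one page or cluster (hypothetical fields)."""

    high_traffic: bool = False
    credible_citations: bool = False
    deep_engagement: bool = False
    frequent_corrections: bool = False
    weak_reader_trust: bool = False
    strong_guide: bool = False
    first_hand_evidence: bool = False


def diagnose(signals: PageSignals) -> List[str]:
    """Return the pillar gaps suggested by the four diagnostic patterns."""
    gaps = []
    if signals.high_traffic and not signals.credible_citations:
        gaps.append("authority")   # discoverable but not reference-worthy
    if signals.deep_engagement and signals.frequent_corrections:
        gaps.append("expertise")   # compelling but error-prone
    if signals.credible_citations and signals.weak_reader_trust:
        gaps.append("trust")       # cited, yet readers hesitate
    if signals.strong_guide and not signals.first_hand_evidence:
        gaps.append("experience")  # good guide, no evidence of doing
    return gaps
```

A page can surface more than one gap at once, which is a reasonable cue to rewrite the brief rather than patch the piece.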

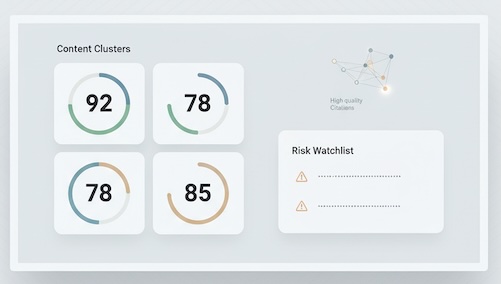

Reporting E-E-A-T to leadership (without mysticism)

Make the quality story legible:

- One-page “Quality Barometer”

  - Average pillar scores by content cluster (how-to, comparisons, research).

  - Notable gains/losses quarter-over-quarter, each with a one-sentence explanation of why.

- Top 10 reference wins

  - New high-cred citations and what they referenced (e.g., methodology graphic, dataset, definition).

- Risk & reliability watchlist

  - Pages with rising correction rates or trust concerns; planned editorial remedies.

- Compound assets pipeline

  - Upcoming pieces likely to score “multi-pillar”: research, benchmarks, expert panels.

This reframes E-E-A-T from folklore to governance: objectives, evidence, and trade-offs.
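The first barometer item, average pillar scores by cluster with quarter-over-quarter movement, is simple arithmetic. A minimal Python sketch follows, assuming each piece is recorded as a dictionary with a `cluster` label and one 0–3 score per pillar; the function names and the record layout are illustrative only.

```python
from collections import defaultdict
from statistics import mean

PILLARS = ("experience", "expertise", "authority", "trust")


def cluster_averages(pieces):
    """Average each pillar score per content cluster.

    `pieces` is an iterable of dicts such as
    {"cluster": "how-to", "experience": 2, "expertise": 3, "authority": 1, "trust": 3}.
    """
    grouped = defaultdict(list)
    for piece in pieces:
        grouped[piece["cluster"]].append(piece)
    return {
        cluster: {p: round(mean(item[p] for item in items), 2) for p in PILLARS}
        for cluster, items in grouped.items()
    }


def quarter_over_quarter(previous, current):
    """Per-pillar change for every cluster present in both quarters."""
    return {
        cluster: {
            p: round(current[cluster][p] - previous[cluster][p], 2) for p in PILLARS
        }
        for cluster in current
        if cluster in previous
    }
```

Feed `cluster_averages` one list of scored pieces per quarter, then pass the two results to `quarter_over_quarter` to get the deltas that each need a sentence of explanation in the report.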

Common pitfalls when chasing E-E-A-T

- Counting everything equally. A link from a scraper ≠ a citation from a standards body. Weight by credibility.

- Confusing length with depth. Longer isn’t more expert; clarity and specificity are.

- Hiding uncertainty. Trust grows when you state limits and link to official guidance wherever acting on the advice carries risk.

- Optimizing for vanity feedback. Applause on social can be divorced from authority or expertise signals.

The takeaway

You can’t export an “E-E-A-T score,” but you can manage quality with proxies. Track first-hand artifacts and outcome specificity for Experience, primary-source alignment and comprehension for Expertise, credible citations and branded demand for Authority, and visible identity, corrections, and disclosures for Trust. Use a simple rubric to prioritize work and a concise barometer to report progress.

Do that consistently, and E-E-A-T stops being a buzzword. It becomes a backbone for editorial decisions—and a defensible narrative for why your content deserves to rank and be read.